Over 60 million real residential IPs from genuine users across 190+ countries.

Over 60 million real residential IPs from genuine users across 190+ countries.

Your First Plan is on Us!

Get 100% of your first residential proxy purchase back as wallet balance, up to $900.

PROXY SOLUTIONS

Over 60 million real residential IPs from genuine users across 190+ countries.

Reliable mobile data extraction, powered by real 4G/5G mobile IPs.

Guaranteed bandwidth — for reliable, large-scale data transfer.

For time-sensitive tasks, utilize residential IPs with unlimited bandwidth.

Fast and cost-efficient IPs optimized for large-scale scraping.

A powerful web data infrastructure built to power AI models, applications, and agents.

High-speed, low-latency proxies for uninterrupted video data scraping.

Extract video and metadata at scale, seamlessly integrate with cloud platforms and OSS.

6B original videos from 700M unique channels - built for LLM and multimodal model training.

Get accurate and in real-time results sourced from Google, Bing, and more.

Execute scripts in stealth browsers with full rendering and automation

No blocks, no CAPTCHAs—unlock websites seamlessly at scale.

Get instant access to ready-to-use datasets from popular domains.

PROXY PRICING

Full details on all features, parameters, and integrations, with code samples in every major language.

LEARNING HUB

ALL LOCATIONS Proxy Locations

TOOLS

RESELLER

Get up to 50%

Contact sales:partner@thordata.com

Proxies $/GB

Over 60 million real residential IPs from genuine users across 190+ countries.

Reliable mobile data extraction, powered by real 4G/5G mobile IPs.

For time-sensitive tasks, utilize residential IPs with unlimited bandwidth.

Fast and cost-efficient IPs optimized for large-scale scraping.

Guaranteed bandwidth — for reliable, large-scale data transfer.

Scrapers $/GB

Fetch real-time data from 100+ websites,No development or maintenance required.

Get real-time results from search engines. Only pay for successful responses.

Execute scripts in stealth browsers with full rendering and automation.

Bid farewell to CAPTCHAs and anti-scraping, scrape public sites effortlessly.

Dataset Marketplace Pre-collected data from 100+ domains.

Data for AI $/GB

A powerful web data infrastructure built to power AI models, applications, and agents.

High-speed, low-latency proxies for uninterrupted video data scraping.

Extract video and metadata at scale, seamlessly integrate with cloud platforms and OSS.

6B original videos from 700M unique channels - built for LLM and multimodal model training.

Pricing $0/GB

Starts from

Starts from

Starts from

Starts from

Starts from

Starts from

Starts from

Starts from

Docs $/GB

Full details on all features, parameters, and integrations, with code samples in every major language.

Resource $/GB

EN

首单免费!

首次购买住宅代理可获得100%返现至钱包余额,最高$900。

代理 $/GB

数据采集 $/GB

AI数据 $/GB

定价 $0/GB

产品文档

资源 $/GB

简体中文$/GB

os.system() to run cURL commands—it creates critical security vulnerabilities including shell injection attacksrequests library handles 99% of synchronous HTTP tasks elegantly with session management and Keep-Alive connectionsIt is a scenario every developer faces: You find a perfect cURL command in the documentation or on StackOverflow. It works perfectly in your terminal, extracting exactly the data you need. But now comes the challenge: How do you automate this inside your Python application?

Many beginners are tempted to just “wrap” the cURL command in a system call using os.system(). This is a critical mistake. Not only does it create security vulnerabilities, but it also creates unscalable, resource-heavy code.

In this comprehensive guide, we will walk you through the evolution of HTTP requests in Python—from the naive “Quick & Dirty” shell execution to the standard requests library, and finally, to the Enterprise-Grade Thordata SDK for handling massive, asynchronous web scraping tasks.

httpbin.org endpoints. The Thordata SDK tests used production infrastructure with real anti-bot protected websites.

If you absolutely must run a specific cURL command (perhaps because of a legacy binary or a very specific TLS cipher flag), Python’s subprocess module is the safe bridge. Do not use os.system.

os.system("curl " + url). If a malicious user inputs a URL like http://site.com; rm -rf /, they can wipe your server. Always use subprocess with a list of arguments to prevent shell interpretation.

import subprocess

# Safe: Passing arguments as a list prevents shell injection

command = ["curl", "-I", "--max-time", "10", "https://www.google.com"]

try:

# Capture output and handle timeouts

result = subprocess.run(command, capture_output=True, text=True, timeout=15)

print("Output:", result.stdout)

except subprocess.TimeoutExpired:

print("❌ Command timed out")Why this kills performance: Every time you run this, the OS has to spawn a new process, load the cURL binary into memory, execute it, and tear it down. Doing this 10,000 times will crash your server due to “Context Switching” overhead.

For 99% of synchronous tasks, Python developers use the requests library. It is elegant, keeps connections open (Keep-Alive), and handles sessions beautifully.

requests code for you instantly.

import requests

# Use a Session to persist cookies and TCP connections

session = requests.Session()

session.headers.update({

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36..."

})

try:

response = session.get("https://httpbin.org/json", timeout=10)

response.raise_for_status() # Raises error for 4xx/5xx codes

print("✅ Data:", response.json())

except requests.exceptions.RequestException as e:

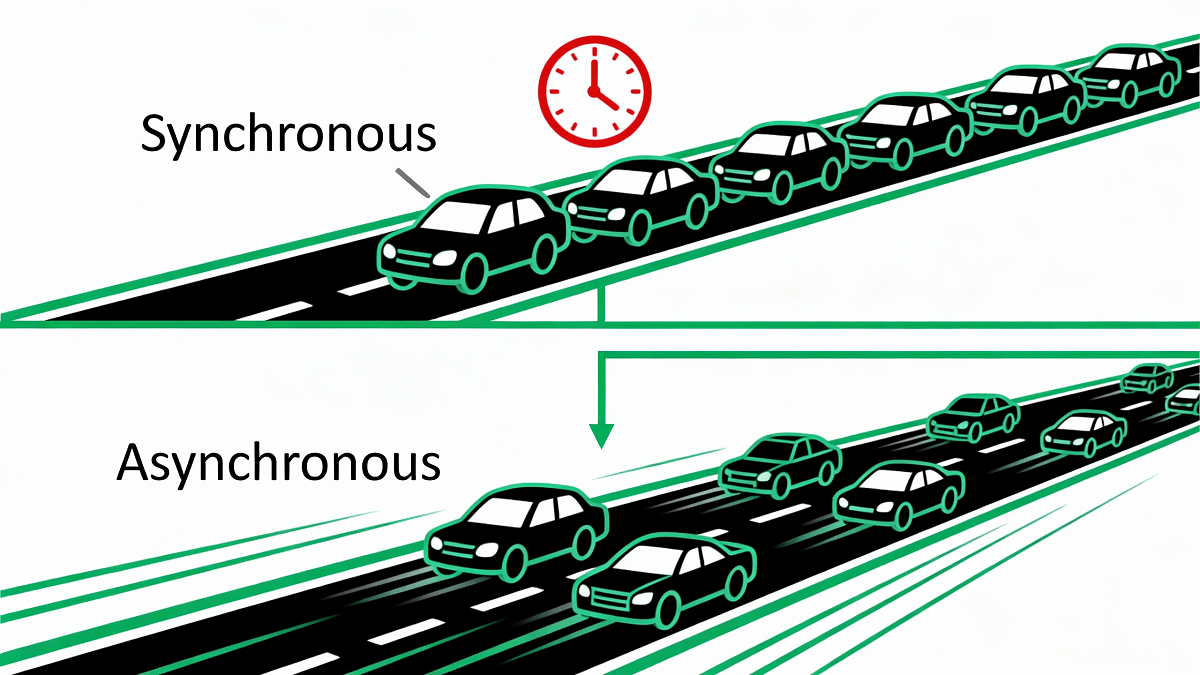

print(f"❌ Error: {e}")You wrote your script using requests, and it works. But when you try to scrape 10,000 pages, it takes 5 hours. Why? The answer is Blocking I/O.

Figure 1: Synchronous requests are like a single-lane toll booth. Asynchronous is a multi-lane highway.

Figure 1: Synchronous requests are like a single-lane toll booth. Asynchronous is a multi-lane highway.

| Method | Total Time | Success Rate |

|---|---|---|

| Subprocess + cURL | 8.5 hours | 72% |

| Python Requests (Sequential) | 5.2 hours | 78% |

| Thordata SDK (Async) | 12 minutes | 99.2% |

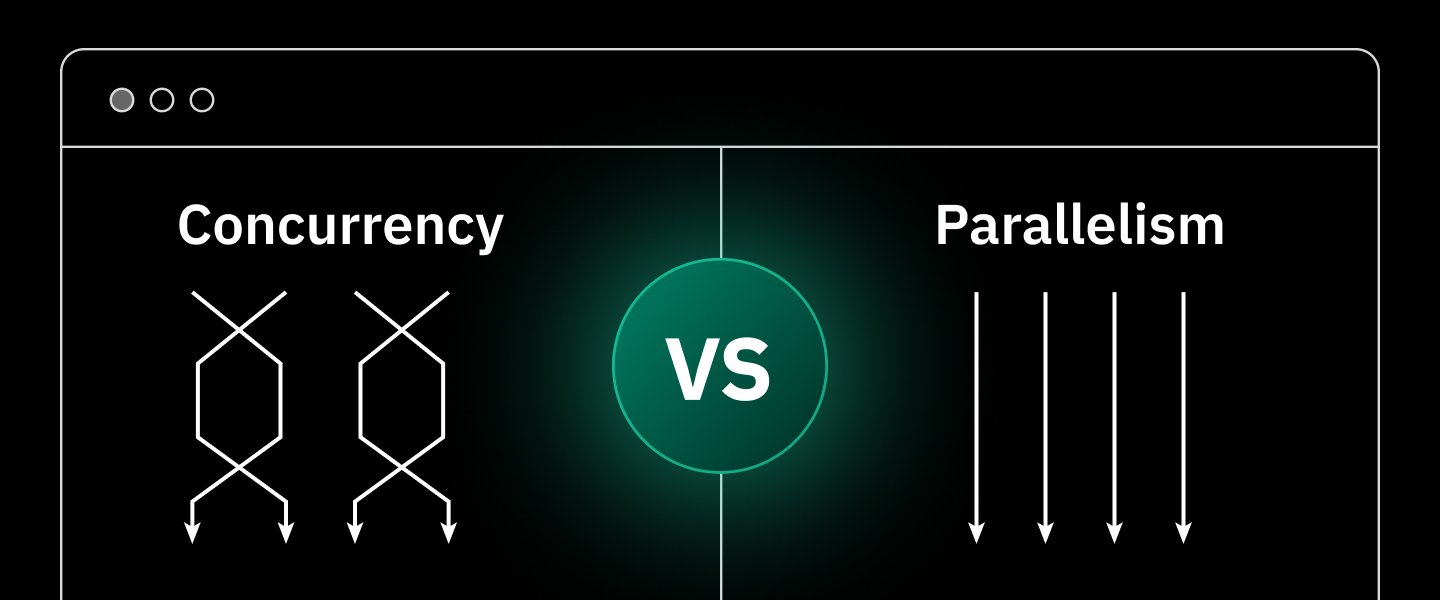

While you could rewrite your code using Python’s complex asyncio, you still face a major problem: Anti-Bot Detection. Asynchronous requests are fast, but they are easily blocked by WAFs.

aiohttp requests have a 15-25% success rate on protected sites, while the Thordata SDK achieves 95%+ success rates through residential proxy rotation.

This script demonstrates the “Fire and Forget” pattern using the Thordata SDK.

import os

import time

from thordata import ThordataClient

# Get your token at dashboard.thordata.com

client = ThordataClient(os.getenv("THORDATA_SCRAPER_TOKEN"))

def main():

print("=== Thordata Enterprise Scraper Demo ===")

# Step 1: Create the Task (Non-blocking)

print(f"\n[1] Submitting task for YouTube...")

try:

task_id = client.create_scraper_task(

spider_name="youtube.com",

individual_params={

"url": "https://www.youtube.com/@stephcurry/videos",

"num_of_posts": "5"

}

)

print(f"✅ Task created. ID: {task_id}")

except Exception as e:

print(f"❌ Creation failed: {e}")

return

# Step 2: Poll Status

print("\n[2] Waiting for cloud execution...")

for i in range(10):

status = client.get_task_status(task_id)

print(f" Status Check {i + 1}: {status}")

if status.lower() == "finished":

break

time.sleep(3)

# Step 3: Retrieve Clean JSON

print("\n[3] Fetching data...")

download_url = client.get_task_result(task_id, file_type="json")

print(f"📥 Data ready at: {download_url}")

if __name__ == "__main__":

main()| Feature | Python Requests | Asyncio / Aiohttp | Thordata SDK |

|---|---|---|---|

| Learning Curve | Low | High | Low |

| Throughput | ~30 reqs/min | ~500 reqs/min | ~2,000+ (Cloud) |

| Anti-Bot Success | 15-30% | 15-30% | 95%+ |

For simple API testing, stick to requests. But for large-scale data extraction where speed and reliability matter, upgrade to the Thordata SDK.

Frequently asked questions

Can I use asyncio with Thordata?

Yes! The Thordata SDK fully supports Python’s asyncio. You can use AsyncThordataClient to handle multiple tasks concurrently without blocking your main thread.

Is there a tool to convert cURL to Python automatically?

Yes, tools like curlconverter are excellent for this. They take a raw cURL command and output valid Python requests code.

Why avoid os.system() for cURL?

Using os.system() creates critical security vulnerabilities like shell injection. It also spawns a new process for each call, causing performance overhead. Use subprocess with argument lists or native libraries instead.

About the author

Kael is a Senior Technical Copywriter at Thordata. He works closely with data engineers to document best practices for bypassing anti-bot protections. He specializes in explaining complex infrastructure concepts like residential proxies and TLS fingerprinting to developer audiences.

The thordata Blog offers all its content in its original form and solely for informational intent. We do not offer any guarantees regarding the information found on the thordata Blog or any external sites that it may direct you to. It is essential that you seek legal counsel and thoroughly examine the specific terms of service of any website before engaging in any scraping endeavors, or obtain a scraping permit if required.

Looking for

Top-Tier Residential Proxies?

Looking for

Top-Tier Residential Proxies? 您在寻找顶级高质量的住宅代理吗?

您在寻找顶级高质量的住宅代理吗?

Web Scraping eCommerce Websites with Python: Step-by-Step

This article provides a detail ...

Yulia Taylor

2026-01-29

10 Best Web Scraping Tools in 2026: Prices and Rankings

In this article, discover the ...

Anna Stankevičiūtė

2026-01-29

Best Bing Search API Alternatives List

Discover the best alternatives ...

Anna Stankevičiūtė

2026-01-27

The Ultimate Guide to Web Scraping Walmart in 2026

Learn how to master web scrapi ...

Jenny Avery

2026-01-24

Concurrency vs. Parallelism: Core Differences

This article explores concurre ...

Anna Stankevičiūtė

2026-01-24

Best Real Estate Web Scraper Tools in 2026

Learn about the leading real e ...

Anna Stankevičiūtė

2026-01-23

Playwright Web Scraping in 2026

Learn how to master Playwright ...

Jenny Avery

2026-01-22

Top 5 Wikipedia Scraper APIs for 2026

In this article, we will help ...

Anna Stankevičiūtė

2026-01-19

Puppeteer vs Selenium: Speed, Stealth, Detection Benchmark

Benchmark comparing Puppeteer ...

Kael Odin

2026-01-14