
Wikipedia is the largest online encyclopedia in the world today, and its vast store of structured information is an invaluable resource for researchers, developers, and businesses. However, when you need this data at scale and on an automated basis, manual copying simply cannot keep up, and you need an efficient, reliable solution. Python with the Requests and BeautifulSoup libraries is a common choice, but it leaves you to handle complex technical issues yourself: request management, IP rotation, parsing logic, and anti-bot evasion.

In this article, we help you find the best alternatives for scraping Wikipedia data: efficient Wikipedia Scraper APIs that reduce the risk of being blocked and deliver cleaned, structured Wikipedia data directly.

A Wikipedia Scraper is an automated program designed to extract data from Wikipedia's HTML pages and convert it into a structured format. It works as an automated agent that simulates human browsing to access specific Wikipedia pages, identifies and extracts the required information (such as article titles, main content, infobox data, categories, citations, revision history, and multimedia links), and then converts this unstructured HTML into a machine-readable, structured format such as JSON or CSV.
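The last step, turning extracted fields into JSON or CSV, can be sketched with Python's standard library. The `WikiArticle` record and its fields are hypothetical, chosen for illustration; real scrapers and APIs define their own schemas. Nested values such as category lists fit naturally in JSON but need flattening for CSV.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class WikiArticle:
    """Hypothetical shape of one scraped article record."""
    title: str
    url: str
    summary: str
    categories: List[str] = field(default_factory=list)


def to_json(articles: List[WikiArticle]) -> str:
    """Serialize records as a JSON array, keeping lists as real JSON lists."""
    return json.dumps([asdict(a) for a in articles], ensure_ascii=False, indent=2)


def to_csv(articles: List[WikiArticle]) -> str:
    """Serialize records as CSV, joining each category list into a single cell."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["title", "url", "summary", "categories"])
    writer.writeheader()
    for article in articles:
        row = asdict(article)
        row["categories"] = "; ".join(article.categories)
        writer.writerow(row)
    return buffer.getvalue()
```

JSON preserves the nested structure for downstream code, while CSV trades that structure for easy loading into spreadsheets.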

Unlike the official APIs, which impose rate limits and return data in fixed formats, high-performance Wikipedia web scraping tools typically integrate dynamic rendering to handle asynchronously loaded content and use residential proxies to mimic real user traffic, keeping the scraping process stealthy and stable.

The applications of a Wikipedia scraper go far beyond simple data collection; it provides data support across many cutting-edge fields. Developers and businesses typically rely on these tools in the following scenarios:

• Creating high-quality training corpora for natural language processing (NLP) and machine learning models.

• Building knowledge graphs that connect entities and relationships, driving semantic search and recommendation systems.

• Conducting large-scale content analysis and trend studies, such as tracking the evolution of specific topics across different language versions.

• Providing real-time, accurate fact-checking and data support for applications, websites, or internal tools.

• Cross-analyzing and integrating Wikipedia information with other data sources (such as news, social media, and academic databases).

• Automating the monitoring of updates for specific industry entries to capture competitors’ latest technological developments or brand evolutions.

• Using the scraped structured data as an underlying knowledge base for question-answering systems or domain-specific intelligent assistants.

Obtaining data from Wikipedia falls into two main technical approaches: building custom scripts and integrating API services. Each has its own trade-offs in cost and efficiency. Depending on your project's scale, budget, and technical expertise, you can choose between traditional Python libraries, browser extensions, and professional web scraper APIs.

Building crawlers with Python libraries is the most common foundational approach for technical teams, and it offers a high degree of customization. By combining Requests and BeautifulSoup, you have complete control over the scraping logic and can precisely parse specific HTML tags.
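As a rough illustration of the parsing side, the sketch below pulls label/value pairs out of a Wikipedia-style infobox. To keep it dependency-free it uses the standard library's `html.parser.HTMLParser` instead of BeautifulSoup, and it assumes the infobox is a `<table>` whose class list contains `infobox`, with `<th>` labels and `<td>` values in each row. `InfoboxParser` and `extract_infobox` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class InfoboxParser(HTMLParser):
    """Collect label/value pairs from the first <table> whose class list includes "infobox"."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: Dict[str, str] = {}
        self._depth = 0                   # <table> nesting depth while inside the infobox
        self._finished = False            # stop after the first infobox
        self._cell: Optional[str] = None  # "th" or "td" while inside a top-level cell
        self._label: List[str] = []
        self._value: List[str] = []

    def handle_starttag(self, tag, attrs):
        if self._finished:
            return
        classes = (dict(attrs).get("class") or "").split()
        if tag == "table":
            if self._depth or "infobox" in classes:
                self._depth += 1
        elif self._depth == 1 and tag == "tr":
            self._label, self._value = [], []
        elif self._depth == 1 and tag in ("th", "td"):
            self._cell = tag

    def handle_endtag(self, tag):
        if not self._depth:
            return
        if tag == "table":
            self._depth -= 1
            self._finished = self._depth == 0
        elif self._depth == 1 and tag in ("th", "td"):
            self._cell = None
        elif self._depth == 1 and tag == "tr":
            # Collapse whitespace and keep only rows that have both a label and a value
            label = " ".join("".join(self._label).split())
            value = " ".join("".join(self._value).split())
            if label and value:
                self.fields[label] = value

    def handle_data(self, data):
        if self._cell == "th":
            self._label.append(data)
        elif self._cell == "td":
            self._value.append(data)


def extract_infobox(html: str) -> Dict[str, str]:
    """Return the infobox fields found in a page's HTML (empty dict if none)."""
    parser = InfoboxParser()
    parser.feed(html)
    parser.close()
    return parser.fields
```

In practice you would pass it page HTML fetched with something like `requests.get(url, timeout=30).text`. With BeautifulSoup, the same idea starts from `soup.select_one("table.infobox")` and iterates over its rows.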

1. Pros:

• Completely free and open-source toolchains can significantly reduce the initial R&D investment for the project.

• Developers can flexibly adjust scraping strategies and specific data cleaning rules according to business needs.

2. Cons:

• High-concurrency scraping can easily trigger Wikipedia's rate limits and get your IP address blocked.

• Maintenance costs are high, because even slight changes in page structure can break parsing scripts entirely.

3. Target Audience:

• Individual developers or lab researchers with solid programming skills and small-scale scraping needs.

Visual browser extensions provide a simple way for non-programmers to extract data through a click interface. These tools usually exist as plugins, allowing you to directly define scraping rules and export results within the browser.

1. Pros:

• You can complete the entire process from locating page elements to exporting data without writing any code.

• It supports instant previews of scraping results, allowing users to quickly correct deviations in collection rules.

2. Cons:

• Browser performance limits make large-scale, automated batch processing impractical.

• They struggle with complex anti-scraping mechanisms and cannot efficiently integrate automatic proxy rotation.

3. Target Audience:

• Marketers or content operators who occasionally need to obtain small amounts of tabular data for analysis.

A professional Scraper API is a highly encapsulated cloud solution that can automatically handle all underlying technical challenges. This service returns the desired data through simple API calls, completely eliminating the hassle of managing servers and proxy pools.

1. Pros:

• Automatic CAPTCHA handling and IP rotation are designed to keep scraping success rates close to 100%.

• Structured JSON output significantly reduces the time spent on data post-processing.

2. Cons:

• You pay service fees based on the number of requests or the volume of data, which makes it unsuitable for zero-budget projects.

3. Target Audience:

• Large enterprises and rapidly growing startups with extremely high demands for data quality and collection stability.

Before undertaking scraping at any scale, you must understand Wikipedia's guidelines so that your data acquisition is both compliant and sustainable. Although Wikipedia's content is publicly available under Creative Commons licenses, that does not mean you can send high-frequency requests to its servers indiscriminately.

Wikipedia's robots.txt file and terms of use set clear rules for automated access. As Proxyway notes in its annual report, respecting a target website's robots.txt file and rate limits is fundamental to keeping the web scraping ecosystem healthy. We recommend clearly identifying yourself in the User-Agent request header and running large-scale collection tasks during off-peak hours to avoid overloading shared public resources.
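Both recommendations can be wired into a crawler with the standard library's `urllib.robotparser`. The sketch below is one way to do it, assuming you have already downloaded the robots.txt text. The bot name and contact details in `USER_AGENT` are hypothetical placeholders (Wikimedia asks automated clients to send a descriptive User-Agent with contact information), and the helper names are illustrative.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical bot name and contact details; replace with your own project's.
USER_AGENT = "ExampleWikiResearchBot/0.1 (https://example.com/bot; bot-admin@example.com)"


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def is_allowed(rules: RobotFileParser, url: str) -> bool:
    """True if the parsed rules permit USER_AGENT to fetch this URL."""
    return rules.can_fetch(USER_AGENT, url)


def request_headers() -> dict:
    """Headers to attach to every request, e.g. requests.get(url, headers=request_headers())."""
    return {"User-Agent": USER_AGENT}
```

Checking `is_allowed` before each request, and sending the same identifying header every time, keeps the crawler's behavior consistent with what site operators can see and contact you about.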

Legal compliance depends primarily on how you handle and use the scraped data. As long as you do not collect non-public or sensitive personal information and your use of the data aligns with the principle of “fair use,” your legal risk is generally low. However, if you plan to use this data as the core content library of a commercial product, you must strictly follow the attribution and share-alike requirements of Wikipedia's CC BY-SA license. Using a legitimate Wikipedia Web Scraper API provider typically gives you a more standardized data delivery pipeline, helping you avoid legal disputes caused by improper scraping.
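One practical habit is to store an attribution line next to every piece of reused text. The sketch below builds such a line, assuming the text comes from an article licensed under CC BY-SA 4.0 (the license shown in Wikipedia's page footer); the wording of the credit line and the helper names are illustrative choices, not a format prescribed by Wikipedia.

```python
from urllib.parse import quote

# Assumed license of Wikipedia article text; confirm against the article footer.
LICENSE_NAME = "CC BY-SA 4.0"
LICENSE_URL = "https://creativecommons.org/licenses/by-sa/4.0/"


def article_url(title: str, lang: str = "en") -> str:
    """Working article URL: spaces become underscores, other unsafe characters are percent-encoded."""
    return f"https://{lang}.wikipedia.org/wiki/{quote(title.replace(' ', '_'))}"


def attribution(title: str, lang: str = "en") -> str:
    """Plain-text credit line to store or display alongside reused article text."""
    return (f'Text from the Wikipedia article "{title}" ({article_url(title, lang)}) '
            f"by Wikipedia contributors, licensed under {LICENSE_NAME} ({LICENSE_URL}).")
```

Keeping the article URL in the record also lets you trace any reused passage back to its source and revision history later.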

With so many Scraper API providers on the market, the choice can be overwhelming. The key is to match your project's needs with each provider's core capabilities. Prioritize the following indicators before making a decision:

• Proxy Network: Does the provider have a large, globally distributed residential proxy pool to get around geographic restrictions?

• Data Quality and Structure: Can the provider return deep, clean, and consistently structured data?

• Reliability and Uptime: Is the service availability close to 100%? This is crucial for production environment applications.

• Usability and Documentation: Is the API intuitively designed, with clear, comprehensive documentation and practical code examples?

• Scalability and Rate Limits: Can it easily handle scale variations from a few requests per second to thousands of requests per second?

• Pricing and Cost Transparency: Is the pricing model clear? Are there flexible free trials or packages available?

• Compliance and Support: Does the provider clearly support compliant scraping and offer timely technical support?

Thordata offers a powerful, focused Wikipedia API. Its design philosophy is “to provide developers with precise and comprehensive data tools like a Swiss Army knife.” Beyond basic text, the API can extract key-value pairs from infoboxes, article category trees, internal link networks, and even historical revision metadata, which is crucial for building complex knowledge applications.

We used Thordata's Web Scraper API to send a composite request to Wikipedia covering image URLs, infoboxes, and summaries. The test target was a medium-length article. End-to-end response time stabilized at around 1,200 milliseconds, the returned JSON was clearly structured and hierarchical, and the image links pointed directly to Wikipedia's static resource URLs, ready for immediate use without extra cleaning. This demonstrates the API's efficiency and stability.

As a giant in the web scraping field, Bright Data offers a comprehensive solution that covers virtually all websites, including optimized support for Wikipedia. Its strength is one of the largest and most compliant proxy networks in the world, which lets its API maintain a very high success rate even against strict access controls. Bright Data's Wikipedia scraper can be configured to extract specific templates or sections and integrated into its data collection workflows, making it well suited to large enterprises that need to combine Wikipedia data with other sources.

Apify is renowned for its scraper platform and rich variety of pre-built “Actors,” and its Wikipedia Scraper Actor is one of the most popular tools on the platform. Its main advantages are ease of use and flexibility: you barely need to write any code. You simply configure the inputs and run the Actor in Apify's web console, then store the results in Apify datasets or deliver them via webhooks, APIs, or various cloud storage options. For teams that want to launch projects quickly and need robust task scheduling and result management, Apify is a good choice.

Oxylabs is another premium web scraper API provider aimed at enterprise customers. Its Wikipedia scraping API emphasizes scalability and reliability and can handle large volumes of concurrent requests. The service includes an intelligent rotating proxy system that keeps connections stable and data fresh. Oxylabs returns highly structured responses, and its support team is recognized by enterprise clients as professional and responsive, making it particularly suitable for large organizations that run continuous 24/7 data pipelines.

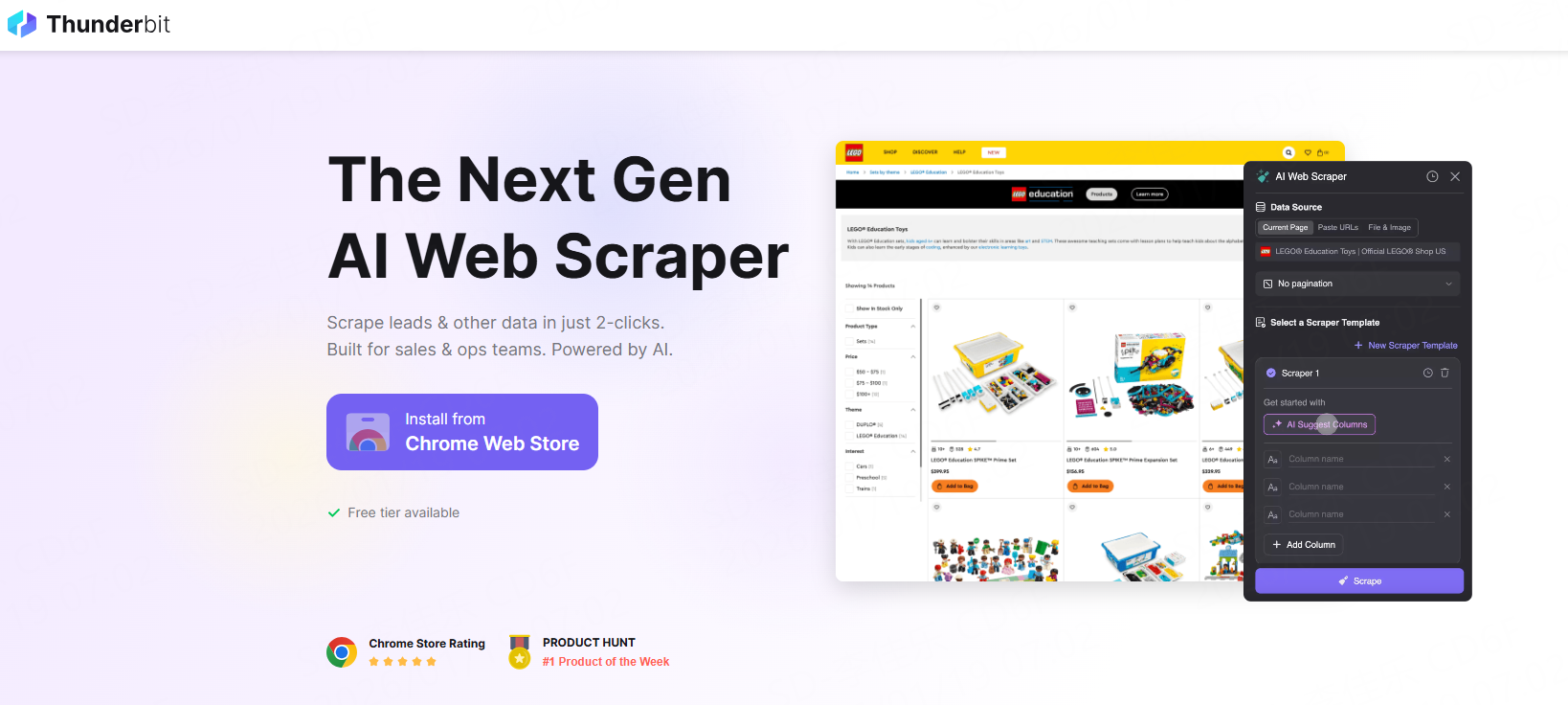

Thunderbit AI Scraper represents the next generation of intelligent scraping tools. Rather than being a simple parser, it integrates AI to handle more complex extraction tasks. For example, it can understand a page's semantics, so even when an article's layout changes or uses non-standard templates, it can extract data fairly accurately based on natural-language instructions. This is especially useful for Wikipedia pages with less consistent structures. Although it may be slower than traditional APIs for purely structured extraction, its flexibility and ability to handle unstructured information are unique selling points.

To make it easier for you to compare the core metrics of different providers, we have compiled the table below for your reference:

| Provider | Bulk Request Support | Proxy Support | Data Format | Delivery Options | Free Trial | Starting Price |
| --- | --- | --- | --- | --- | --- | --- |
| Thordata | ✔️ | ✔️ | JSON/CSV/XLSX | Amazon S3/Snowflake/Webhooks | ✔️ (5,000 credits, 7-day free trial) | $30/month |
| Bright Data | ✔️ | ✔️ | JSON/CSV | Webhook/API | ❌ | $499/month |
| Apify | ✔️ | ✔️ | JSON/Excel | Webhook/API | ✔️ | $29/month |
| Oxylabs | ✔️ | ✔️ | HTML/JSON/PNG | Amazon S3/GCS | ✔️ | $49/month + VAT |
| Thunderbit | ✔️ (Limited) | ✔️ (Basic) | JSON | Google Sheets/Airtable/Notion | ❌ | $15/month |

Disclaimer: The information in the table above is based on each provider's official statements for 2026. Features, pricing, and package contents of API services are subject to change at any time, and we cannot guarantee that this information is fully up to date or accurate. Before making a final purchase, we strongly recommend visiting each provider's official website to verify the latest product details, terms of service, and pricing.

Although modern APIs have greatly simplified the process, handling large volumes of Wikipedia data still involves tricky challenges such as CAPTCHA pop-ups and dynamic loading. If these issues are not handled properly, a data collection project can stall after running for hours or return large numbers of errors.

Challenge 1: IP Banning Due to Anti-Scraping Measures

Wikipedia protects its servers from abuse with strict rate limits, and a single IP that sends requests at an unusual frequency can quickly be throttled or blocked. This kind of frequency-based detection is one of the most common obstacles in web scraping, especially if you have not configured a high-quality proxy pool.

• Solution: Using residential proxies with automatic rotation can distribute requests across real user IPs around the world, thereby simulating natural browsing patterns.

```python
# Example code: Using the Requests library with proxy support (for conceptual demonstration)
import requests

# Placeholder endpoint; use the URL and parameter names your Scraper API provider documents
api_endpoint = "https://api.scraperprovider.com/scrape"

params = {
    "api_key": "YOUR_ACTUAL_KEY",
    "url": "https://en.wikipedia.org/wiki/Web_scraping",
    "proxy_type": "residential",  # provider-specific option (illustrative)
}

# A timeout keeps one stalled request from hanging the whole job
response = requests.get(api_endpoint, params=params, timeout=60)

if response.status_code == 200:
    print("Data collection successful:", response.json())
else:
    print("Request failed with status", response.status_code)
```
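A Scraper API endpoint hides proxy rotation behind the provider's service. If you manage your own pool instead, the idea can be sketched as follows: cycle through proxies and back off exponentially whenever the server answers 429 (Too Many Requests) or 503 (Service Unavailable). The proxy URLs are placeholders, and `send` is a hypothetical caller-supplied function, which keeps the retry logic independent of any particular HTTP library.

```python
import itertools
import time
from typing import Callable, Dict, List

# Placeholder proxy URLs; a real provider issues its own hosts and credentials.
PROXIES: List[str] = [
    "http://user:pass@proxy-a.example.com:8000",
    "http://user:pass@proxy-b.example.com:8000",
]
RETRYABLE_STATUSES = {429, 503}  # Too Many Requests / Service Unavailable


def fetch_with_rotation(
    url: str,
    send: Callable[[str, Dict[str, str]], int],
    proxies: List[str] = PROXIES,
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Request `url` through successive proxies, backing off exponentially on 429/503.

    `send(url, proxy_map)` performs one request and returns its HTTP status code.
    Returns the last status code seen.
    """
    pool = itertools.cycle(proxies)
    status = 0
    for attempt in range(max_attempts):
        proxy = next(pool)
        status = send(url, {"http": proxy, "https": proxy})
        if status not in RETRYABLE_STATUSES:
            return status
        if attempt < max_attempts - 1:
            # Wait base_delay, then 2x, 4x, ... before retrying through the next proxy
            sleep(base_delay * 2 ** attempt)
    return status
```

With Requests, `send` could be `lambda u, p: requests.get(u, proxies=p, timeout=30).status_code`. The backoff matters as much as the rotation: it slows down when Wikipedia signals overload instead of simply spreading the same load across more IPs.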

Challenge 2: Handling JavaScript-Rendered Interactive Content

Some Wikipedia page elements, such as certain tables or collapsible panels, rely on JavaScript, and content that only appears after scripts run cannot be retrieved by a simple HTML parser. Tools therefore need to be able to execute page scripts; otherwise, the scraping results will be incomplete.

• Solution: Integrate headless browser technology, or use a Wikipedia Scraper API that supports JS rendering, to obtain the fully rendered DOM.

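```javascript
// Example code: checking whether dynamically rendered content (the infobox) was captured.
// Assumes a rendering API at this endpoint that returns JSON with a `content` field
// holding the rendered HTML; the endpoint and response shape are illustrative.
async function fetchWikiData(url) {
  const endpoint = `https://api.thordata.com/v1/render?url=${encodeURIComponent(url)}`;
  const response = await fetch(endpoint);
  if (!response.ok) {
    throw new Error(`Render request failed: HTTP ${response.status}`);
  }
  const data = await response.json();

  // Infobox tables usually carry several classes (e.g. "infobox vcard"),
  // so match the class name rather than the exact attribute string
  if (/class="[^"]*\binfobox\b/.test(data.content ?? "")) {
    return "Complete data has been obtained";
  }
  return "Rendering needs to be retried";
}
```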

Challenge 3: Parsing Non-Standardized HTML Structures

Because Wikipedia articles are edited by thousands of contributors, HTML tag nesting can differ subtly from page to page. This inconsistency makes generic regular expressions or CSS selectors extremely difficult to write and fragile to maintain.

• Solution: Use a machine learning-backed parsing engine that can automatically recognize a page's main content. By delegating Wikipedia scraping to an API with semantic analysis capabilities, you can obtain clean, standardized fields without worrying about changes in the underlying HTML.

Choosing the right Wikipedia Scraper API is not just about obtaining data; it is also about staying competitive in the rapidly changing technology landscape of 2026. As we have seen, each method, from manual copying and custom crawlers to generic or specialized scraper APIs, has a clear place. For most users who prioritize efficiency, reliability, and data quality, investing in a professional Wikipedia Scraper API is a wise choice. Whether you need to scrape Wikipedia to enrich your AI model's knowledge base or to gain in-depth market insights, mastering these tools will make your data-driven decision-making smoother. Are you ready to leave cumbersome low-level coding behind and embrace efficient, intelligent automated data collection?

We hope the information provided is helpful. However, if you have any further questions, feel free to contact us at support@thordata.com or via online chat.


Frequently asked questions

Is API scraping legal?

As long as you comply with the target website's terms of service, robots.txt rules, and relevant copyright laws and license terms (such as Creative Commons licenses), and use compliant methods (such as adding delays and respecting rate limits), using an API for scraping is generally legal. The key is “responsibility” and “moderation.”

Is the Wikipedia API free?

Yes, the MediaWiki Action API and the REST APIs provided by Wikipedia are free to use, but they are subject to rate limits and usage guidelines (such as sending a descriptive User-Agent and avoiding excessive parallel requests), and the data structures they return may not fully meet deep scraping needs.
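For lighter needs, the official MediaWiki Action API can return article text without any HTML parsing. The sketch below builds a `query` request using `prop=extracts` (provided by the TextExtracts extension that English Wikipedia runs) and reads a `formatversion=2` JSON response. `build_extract_url` and `parse_extracts` are hypothetical helper names.

```python
import json
from urllib.parse import urlencode

API_URL = "https://en.wikipedia.org/w/api.php"


def build_extract_url(title: str) -> str:
    """MediaWiki Action API URL for the plain-text lead section of one article."""
    params = {
        "action": "query",
        "prop": "extracts",   # provided by the TextExtracts extension
        "exintro": 1,         # lead section only
        "explaintext": 1,     # plain text instead of HTML
        "titles": title,
        "format": "json",
        "formatversion": 2,   # "pages" comes back as a list
        "maxlag": 5,          # if replication lag exceeds 5 s, the API returns an error instead
    }
    return f"{API_URL}?{urlencode(params)}"


def parse_extracts(body: str) -> dict:
    """Map each returned page title to its extract (empty string if the page is missing)."""
    data = json.loads(body)
    return {page["title"]: page.get("extract", "") for page in data["query"]["pages"]}
```

To run it live, fetch the URL with a descriptive User-Agent header, for example `requests.get(build_extract_url("Web scraping"), headers={"User-Agent": "..."}, timeout=30).text`, and pass the response body to `parse_extracts`.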

Can ChatGPT create a Wikipedia page?

Technically, ChatGPT can generate text in a style similar to Wikipedia's. However, creating or editing an actual Wikipedia article must strictly follow Wikipedia's three core content policies (neutral point of view, verifiability, and no original research), and the content must be reviewed and submitted by human editors. Unapproved automated page creation violates Wikipedia's policies and can lead to blocks.

Are you allowed to web scrape Wikipedia?

Yes, under certain conditions. Wikipedia's robots.txt file allows most crawlers but explicitly disallows certain directories. Most importantly, you must respect rate limits, identify yourself with a descriptive User-Agent, and avoid overloading the servers. Take particular care with large-scale commercial scraping.


About the author

Anna is a content specialist who thrives on bringing ideas to life through engaging and impactful storytelling. Passionate about digital trends, she specializes in transforming complex concepts into content that resonates with diverse audiences. Beyond her work, Anna loves exploring new creative passions and keeping pace with the evolving digital landscape.

The thordata Blog provides all its content in its original form and solely for informational purposes. We make no guarantees regarding the information on the thordata Blog or on any external sites it may link to. Before engaging in any scraping activity, you should seek legal counsel and carefully review the terms of service of the target website, or obtain a scraping permit if required.
